Midweek check-in, fam! Clean energy just hit a global record, so why is the U.S. turning back to coal? Let’s get into it.

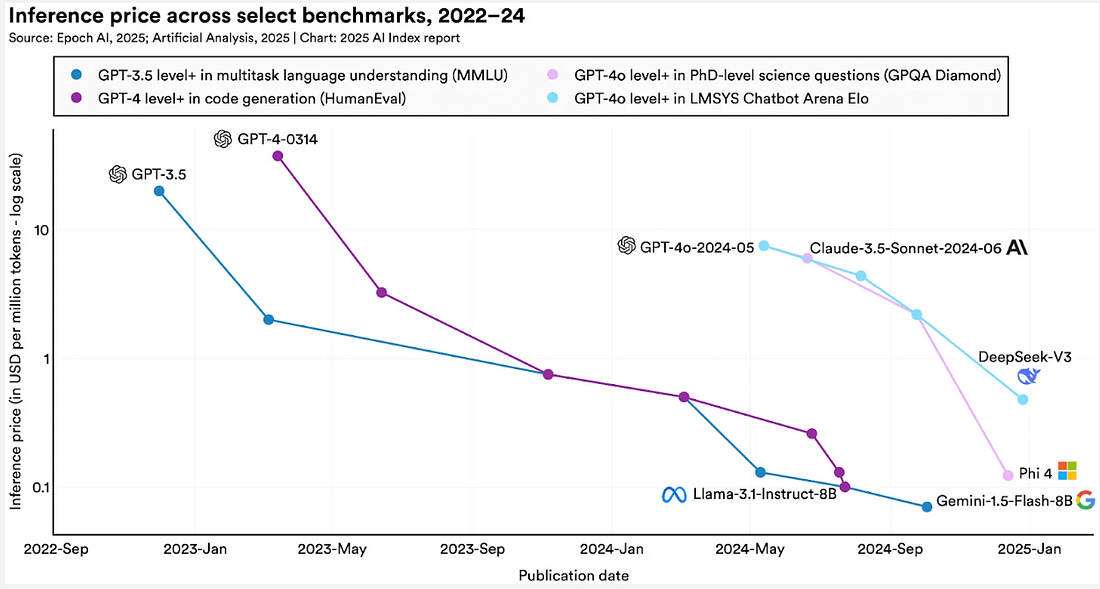

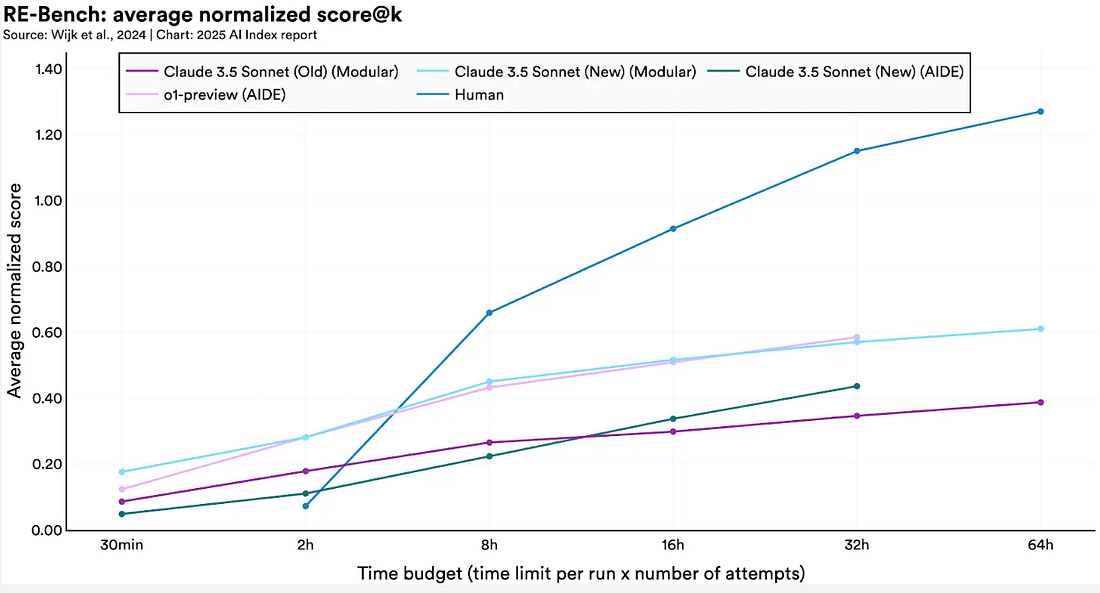

These charts show the state of AI in 2025.

Stanford’s 2025 AI Index is out, and it reveals a maturing, more efficient AI ecosystem, with major shifts in costs, capabilities, regulation, and risks:

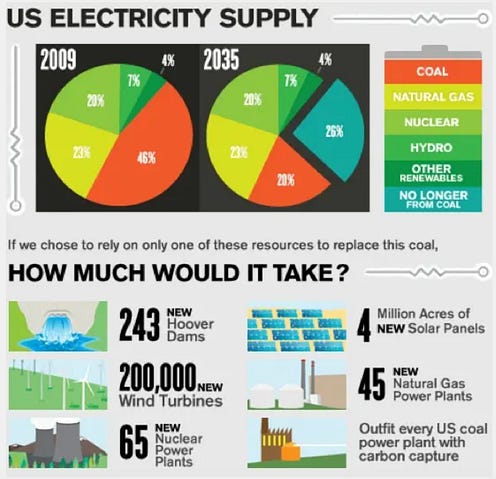

Bottom line: AI is cheaper, faster, and more integrated, but it is also raising concerns as adoption and incidents rise.

Trump pushes coal to power AI boom.

Trump signed executive orders aimed at reviving the dirty and inefficient coal industry, citing rising electricity demand from AI. The orders invoke the Defense Production Act, give the Energy Department emergency powers to keep coal plants open, fast-track leases on federal lands, and cut environmental regulations. Key context:

Bottom line: The new administration is banking on coal to meet AI-era power needs, despite declining demand for coal, economic hurdles, and environmental backlash.

📰 AI News and Trends

🌐 Other Tech news

AI Red Lines: Can AI be safe by design?

As artificial intelligence systems rapidly grow in capability and autonomy, the global conversation around their governance is shifting. Regulators and developers increasingly recognize the need to move beyond reactive safety measures toward safety by design: ensuring AI systems are built from the ground up to be safe, aligned, and trustworthy.

One promising concept gaining traction is AI red lines. These are clear boundaries that define behaviors and uses AI must never cross. Examples include autonomous self-replication, breaking into computer systems, impersonating humans, enabling weapons of mass destruction, and conducting unauthorized surveillance. Red lines act as non-negotiable limits that protect against severe harms, whether those harms are caused by misuse or by AI systems acting independently.

Crucially, red lines aren’t just policy ideals; they are design imperatives. To be effective, they must be embedded in how AI is developed, tested, and deployed. That means building in technical safeguards, conducting rigorous safety testing, and establishing oversight mechanisms that catch violations before they cause real-world damage.

Three key qualities make red lines meaningful: clarity (the behavior must be precisely defined), universal unacceptability (the action must be widely viewed as harmful), and consistency (the line must hold across contexts and jurisdictions). Done right, red lines can foster a common framework across borders, enabling responsible innovation while preventing a race to the bottom in AI safety. In high-stakes domains such as healthcare, defense, and finance, these boundaries matter more than ever. As AI systems increasingly influence real-world decisions and outcomes, red lines offer a way to protect society while still unlocking AI’s potential.
In doing so, they shift the focus from fixing harm after the fact to building technology that won’t cause it in the first place.

But how viable is this model in practice? Can we realistically define universal red lines in a world where ethical norms, legal frameworks, and technological capabilities vary widely across countries and contexts? Who gets to decide what’s “unacceptable,” and how do we ensure that enforcement mechanisms are both effective and fair without stifling innovation? These open questions challenge the simplicity of the red-line concept and highlight the need for inclusive, globally coordinated approaches to AI governance.

🧰 AI Tools

Download our free list of 1000+ AI tools.

🚀 Showcase Your Innovation in the Premier Tech and AI Newsletter

As a vanguard in technology and artificial intelligence, we pride ourselves on delivering cutting-edge insights, AI tools, and in-depth coverage of emerging technologies to more than 55,000 tech CEOs, managers, programmers, entrepreneurs, and enthusiasts. Our readers represent the brightest minds from industry giants such as Tesla, OpenAI, Samsung, IBM, NVIDIA, and countless others. Explore sponsorship possibilities and elevate your brand's presence in the world of tech and AI. Learn more about partnering with us.

You’re a free subscriber to Yaro’s Newsletter. For the full experience, become a paying subscriber.

Disclaimer: We do not give financial advice. Everything we share is the result of our research and our opinions. Please do your own research and make conscious decisions.

Wednesday, April 9, 2025

📊 These Charts Show the State of AI in 2025
