💭 Understanding AI Hallucinations and How to Mitigate Them

Plus: Nvidia Tops Market Value Charts, Yet CEO Eyes Risks in Tech Shift

Get in Front of 50k Tech Leaders: Grow With Us

Hello Team,

This is our midweek update. Today, we walk through a practical tutorial on preventing or reducing AI hallucinations. Plus, Nvidia claims the top spot as the world's most valuable company, and we look at the risks of social media alongside the U.S. Surgeon General's push to raise awareness of them.

Your feedback is invaluable to us, so please keep sharing your thoughts, ideas, and suggestions. Thank you!

📰 AI News and Trends

🌐 Other Tech News

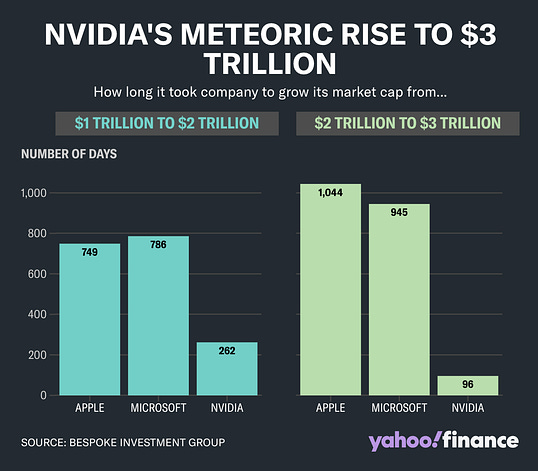

Understanding AI Hallucinations and How to Mitigate Them

AI hallucinations are cases where chatbots and other AI models generate incorrect or fictional information. They happen because these models, including large language models such as GPT, generate responses from patterns in their training data rather than by retrieving facts from a definitive source. They are designed to predict the next word in a sequence, so they effectively compose an answer as they go, which can produce inaccuracies or entirely fabricated responses.

We have put together a set of tools and strategies to reduce AI hallucinations:

1. Chain-of-Thought Prompting: Ask the AI to break down its reasoning step by step before delivering a final answer. This makes it easier to follow the logic behind its conclusions and to spot potential errors. Adding "Explain your reasoning step by step" to your prompt helps with this.
2. Explicit Instruction in Prompts: State clearly in your prompts that the AI should rely on known facts and should say so when it does not know the answer. This discourages the model from making things up.
3. Use Explicit Instructions: Direct the AI to indicate when it is uncertain or when information should be verified. This keeps the model from presenting guesses as facts.
4. Be Aware that AI Will Hallucinate: Understand the limitations of AI, including its tendency to produce plausible but incorrect information. Knowing these limitations helps you assess AI responses critically.
5. Error Rate Monitoring: Track the AI's performance and error rates. This shows which kinds of mistakes it is prone to, so you can adjust your strategies accordingly.
6. Regular Feedback: Give feedback on AI outputs. Most platforms have mechanisms for reporting inaccuracies, which helps improve model performance over time.
7. Limiting AI Autonomy in Sensitive Areas: Where accuracy is crucial, such as legal or healthcare information, limit the AI's autonomy and ensure human oversight.
8. Implement Checks and Balances: Where possible, cross-verify AI-generated information against reliable sources. This is particularly important for factual claims and data-driven decisions.

By applying these tools and techniques, users can better manage AI outputs and reduce the risk of hallucinations, leading to more reliable and trustworthy AI interactions.

Nvidia Tops Market Value Charts, Yet CEO Eyes Risks in Tech Shift

Nvidia has become the world's most valuable public company, reaching a $3.34 trillion market cap and surpassing Microsoft and Apple. The surge is driven by its 80% share of the market for data center AI chips; in its most recent quarter, data center revenue jumped 427% year over year to $22.6 billion.

Despite this success, CEO Jensen Huang is pushing Nvidia toward software and cloud services to hedge against a potential decline in hardware demand. The shift includes DGX Cloud, which rents out Nvidia-powered servers directly to customers. However, this strategy puts Nvidia in direct competition with major customers such as AWS and Microsoft, and it has sparked tensions over slow data center expansions and Nvidia's control over how the servers are set up. The pivot aims to secure Nvidia's future in an evolving tech landscape while it navigates complex relationships with major industry players.

🧰 AI Tools Of The Day: Legal Assistant
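As a concrete illustration of strategies 1 to 3 in the hallucination tutorial above, here is a minimal Python sketch that wraps a question in chain-of-thought and uncertainty instructions. The function name `build_prompt`, the constant `UNCERTAINTY_RULES`, and the exact wording of the rules are our own assumptions, not part of any particular chatbot's API. The sketch only builds the prompt text; sending it to a model is left to whichever tool you use.

```python
# Hypothetical prompt builder for strategies 1-3: it only assembles text and
# does not call any model API.
UNCERTAINTY_RULES = (
    "Rely only on well-established facts. "
    "If you do not know the answer, say \"I don't know\" instead of guessing. "
    "Mark any statement you are unsure of, or that should be verified, with [VERIFY]."
)


def build_prompt(question: str, step_by_step: bool = True) -> str:
    """Wrap a user question with instructions that discourage made-up answers."""
    parts = [UNCERTAINTY_RULES, f"Question: {question.strip()}"]
    if step_by_step:
        # Chain-of-thought cue from strategy 1.
        parts.append("Explain your reasoning step by step, then give your final answer.")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("Which company designed the first commercial microprocessor?"))
```

Putting the uncertainty rules first and the step-by-step cue last is just one reasonable ordering; the point is that both instructions travel with every question rather than being left to memory.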
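For strategy 5 in the hallucination tutorial above (error rate monitoring), a simple tally of reviewed answers is often enough to see which mistakes a model makes most. The sketch below assumes a person has already checked each answer and labeled any error with a category of their choosing; `ErrorTracker` and its methods are hypothetical names for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ErrorTracker:
    """Hypothetical tally of human-reviewed AI answers (strategy 5)."""
    total: int = 0
    errors: Counter = field(default_factory=Counter)

    def record(self, correct: bool, category: str = "uncategorized") -> None:
        """Log one reviewed answer; count it under `category` if it was wrong."""
        self.total += 1
        if not correct:
            self.errors[category] += 1

    def error_rate(self) -> float:
        """Fraction of reviewed answers that contained an error."""
        return sum(self.errors.values()) / self.total if self.total else 0.0

    def breakdown(self) -> dict[str, float]:
        """Per-category error rate over all reviewed answers, most frequent first."""
        if not self.total:
            return {}
        return {cat: n / self.total for cat, n in self.errors.most_common()}


if __name__ == "__main__":
    tracker = ErrorTracker()
    tracker.record(correct=True)
    tracker.record(correct=False, category="fabricated citation")
    tracker.record(correct=False, category="wrong date")
    tracker.record(correct=True)
    print(tracker.error_rate())  # 0.5
    print(tracker.breakdown())
```

Categories such as "fabricated citation" or "wrong date" are examples only; the breakdown becomes useful once enough answers have been reviewed to show a pattern, which is also good material for the platform feedback described in strategy 6.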
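Strategies 7 and 8 from the hallucination tutorial above can be combined into a simple routing rule: questions in sensitive areas go to a human, and answers whose factual claims cannot be matched against a trusted source get flagged for checking. This is a deliberately naive sketch under several assumptions: the keyword list, the function names `is_sensitive` and `route_answer`, and the idea that claims have already been extracted from the answer as plain sentences are all hypothetical, and exact string matching stands in for a real fact-checking step.

```python
import re

# Hypothetical keyword list; a real system would need a far more robust check.
SENSITIVE_TERMS = {"diagnosis", "dosage", "medication", "symptoms", "lawsuit", "contract", "legal"}


def is_sensitive(text: str) -> bool:
    """True if the text mentions any term from SENSITIVE_TERMS (strategy 7)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not SENSITIVE_TERMS.isdisjoint(words)


def route_answer(question: str, claims: list[str], trusted_facts: set[str]) -> str:
    """Decide what to do with an AI answer before it reaches a user.

    claims: factual statements already pulled out of the answer (assumed step).
    trusted_facts: statements confirmed against a reliable source.
    """
    if is_sensitive(question):
        return "human_review"      # strategy 7: keep a person in the loop
    unverified = [c for c in claims if c not in trusted_facts]
    if unverified:
        return "verify_sources"    # strategy 8: cross-check before relying on it
    return "auto_approve"


if __name__ == "__main__":
    facts = {"Water boils at 100 degrees Celsius at sea level."}
    print(route_answer("What dosage of ibuprofen is safe?", [], facts))
    # Deliberately wrong claim, so it is not in the trusted set.
    print(route_answer("Who wrote Hamlet?", ["Hamlet was written in 1750."], facts))
```

Checking sensitivity first means a medical or legal question reaches a person even when every extracted claim happens to match the trusted set.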
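The Nvidia figures above also let you back out the size of the year-earlier quarter. Assuming, as Nvidia reported, that the 427% is a year-over-year comparison, revenue rose to 1 + 4.27 = 5.27 times its earlier level, so the earlier value is 22.6 / 5.27. A short Python sketch of that arithmetic (the helper name `prior_value` is ours):

```python
def prior_value(current: float, pct_increase: float) -> float:
    """Invert a percentage increase: current = prior * (1 + pct_increase / 100)."""
    return current / (1 + pct_increase / 100)


if __name__ == "__main__":
    latest_quarter = 22.6  # data center revenue, $ billions (from the article)
    growth_pct = 427       # reported year-over-year increase, in percent
    earlier = prior_value(latest_quarter, growth_pct)
    print(f"Implied year-earlier quarter: ${earlier:.2f}B")  # prints $4.29B
```

This gives roughly $4.3 billion, so the data center business grew to a little over five times its size a year earlier. Because 427% is itself a rounded figure, treat the result as approximate.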

Surgeon General Advocates Warning Labels on Social Media

Dr. Vivek H. Murthy, the U.S. Surgeon General, is calling for mandatory warning labels on social media platforms to address the mental health risks they pose to adolescents. We personally believe adults should cut back on social media for the same reasons. Murthy stresses the urgency of the youth mental health crisis, noting that adolescents who use social media for more than three hours a day have twice the risk of developing anxiety and depression. As of summer 2023, average daily use among young people was 4.8 hours. The proposed labels would warn users of the potential dangers, much like tobacco warning labels, which have proven effective at changing behavior. Murthy also emphasizes the need for broader legislative and community action to make social media safer for young users.

🚀 Showcase Your Innovation in the Premier Tech and AI Newsletter

As a vanguard in technology and artificial intelligence, we pride ourselves on delivering cutting-edge insights, AI tools, and in-depth coverage of emerging technologies to more than 55,000 tech CEOs, managers, programmers, entrepreneurs, and enthusiasts. Our readers include some of the brightest minds at industry giants such as Tesla, OpenAI, Samsung, IBM, NVIDIA, and many others. Explore sponsorship opportunities and elevate your brand's presence in the world of tech and AI. Learn more about partnering with us.

You're a free subscriber to Yaro's Newsletter. For the full experience, become a paying subscriber.

Disclaimer: We do not give financial advice. Everything we share is the result of our research and our opinions. Please do your own research and make conscious decisions.

Wednesday, June 19, 2024
